Upgrading

Before you upgrade your project to use a newer version of the geoprocessing library, look in package.json -> devDependencies to see which version of @seasketch/geoprocessing you have. Then check what the latest version of geoprocessing is.

Read the release notes, check for any further migration steps below, and decide which version you will upgrade to.

Any upgrade to a new major version (e.g. 6.x to 7.x) can be a significant undertaking. For this reason, you will need to choose your upgrade path: run the upgrade script, or create a new project and migrate your assets. See the release notes for a recommendation.

Upgrade script

First, make sure you don't have any uncommitted work and your git repository is in a clean state. Then run the following:

npm update @seasketch/geoprocessing@latest

npm install

npm run upgrade

The upgrade command is designed to bring your project up to date to work with the latest release. It may alter or overwrite a significant number of files in your project, including configuration, scripts, package.json dependencies, i18n strings, etc. This includes overwriting any customizations you may have made to these resources. After upgrading, inspect the git changes and recover any customizations at that time.

After upgrading, you should always rerun your tests and storybook to verify that everything is working properly and that any changes in test output are expected.

npm test

npm run storybook

Finally, rebuild and redeploy your project.

npm run build

npm run deploy

If you are seeing errors or unexpected behavior, try any one of the following steps:

- Rebuild dependencies:

rm -rf node_modules && rm package-lock.json && npm install

- Reimport all datasources:

npm run reimport:data

- Republish all datasources:

npm run publish:data

- Clear AWS cache:

npm run clear-results, then choose all

Create New Project And Migrate Assets

This is a heavy-handed alternative to the update and upgrade path.

- Follow the tutorial to init a new project for the version you are interested in.

- Consider choosing a name for your project in package.json that is different from your older project, so that the two can be tested side-by-side.

- Migrate your assets over one piece at a time:

  - Migrate your project folder, including datasources.json, metrics.json, and basic.json. Be on the lookout for any schema changes to these files. Then run npm run reimport:data.

  - Copy over your geoprocessing functions and preprocessing functions. Fix any errors, and look for clues in the release notes or migration guide for changes needed. Get all tests running again properly. Make sure output matches your old project or, if it differs, verify the reason. Newer versions often increase the accuracy of results, but accuracy should not decrease without explanation.

  - Migrate report clients over, fixing any errors. Look at the sample tutorial, release notes, and migration guide for clues on what is needed. Get storybook rendering reports properly for all smoke test output, matching the previous version.

- Now build and deploy a test stack alongside production.

- When you're ready, point the production sketch classes over to your new functions and reports.

Upgrade Environment

Devcontainer

If you're using geoprocessing-devcontainer to develop in a Docker environment, you will need to update this repo and the underlying geoprocessing-workspace Docker image to the latest version. First, make sure Docker Desktop is running, then:

cd geoprocessing-devcontainer

git pull

docker pull seasketch/geoprocessing-workspace

You should now be able to start a Docker container shell using the latest image and verify that everything is up to date:

sudo docker run -it --entrypoint /bin/bash seasketch/geoprocessing-workspace

node -v

npm -v

gdalinfo --version

Exit back out of this shell when done.

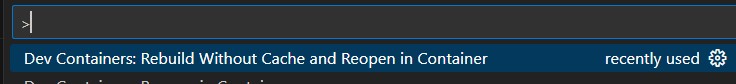

When restarting your VSCode devcontainer for the first time, you may want to use this VSCode command to ensure your new container image is used:

Press Cmd-Shift-P (macOS) or Ctrl-Shift-P (Windows) to open the command palette, then run:

Dev Containers: Rebuild Without Cache and Reopen In Container

Windows WSL Geoprocessing Distribution

If you previously installed a version of the Geoprocessing distribution for running in WSL, you can install a new one right alongside it.

Upgrading your WSL Geoprocessing Distribution means installing a new image using the system setup instructions, re-adding all your projects to it, then leaving your old one behind. You can run both images at the same time in different shells.

You can backup and remove a WSL distribution as follows:

- First, open Windows Explorer and go to C:\WslDistributions. Find the name of the distribution you want to remove. For example, say it is gp-stable-20241223.

- Now export this image to a backup directory. These commands assume the distribution is registered in WSL under the name Geoprocessing; run wsl --list --verbose to see the registered names on your machine.

mkdir C:\tmp\WslBackups

wsl --export Geoprocessing C:\tmp\WslBackups\gp-stable-20241223.tar

To unregister and delete this old image, do the following:

- First, close all shells and VSCode sessions using this image, then unregister it in PowerShell:

wsl --unregister Geoprocessing

Now delete the folder C:\WslDistributions\gp-stable-20241223

Deploy Test Stack Alongside Production

If you'd like to deploy to a test stack first, alongside your existing production stack, to make sure everything is working properly:

- Change the name field of your project in package.json (e.g. "name": "my-reports" becomes "name": "my-reports-7x").

- Then run npm run build and npm run deploy. This will deploy to a new AWS CloudFormation stack, separate from your production stack.

- Once deployed, repoint your existing sketch classes to the new geoprocessing service, or create separate "admin only" sketch classes that point to your new test service. Make sure that all required sketch class attributes are in place.

- When you are ready to update production, change the name in package.json back, and rerun build and deploy.

Migration Guide

These migration guides are a supplement to the release notes. They offer manual steps to be taken to bring your code up to date to work with a specific version of the geoprocessing library, whether you chose to use the upgrade script or init a new project and copy your assets over.

6.x to 7.x

Migration examples:

- bermuda-reports, which created a new git repository and left the old one behind.

Running Multiple Versions of Geoprocessing Workspace

The latest version of geoprocessing-workspace will only work with geoprocessing 7.x projects. This is due to a change in how GDAL produces FlatGeobuf files. If you suddenly see "Not a FlatGeobuf file" errors when trying to read your files, this is likely the reason.

In order to continue developing older 6.x and earlier geoprocessing projects at the same time, you will need to start your devcontainer using the local-dev-pre-7x environment. This is pinned to an older version of the Docker image, seasketch/geoprocessing-workspace:sha-69bb889.

Migrate Code To ESM

As you migrate functions and report components from a pre-7.0 project to a 7.x project, you will need to migrate your code to the format Node requires for ESM code. You will also need to make it Node 22 compliant.

Here's a summary of the changes required. VSCode should give you hints along the way, so you can work through each source file looking for text with a red squiggly underline. Focus on the src directory.

- For each import of a local module (e.g. import project from "../project"), use a full explicit path and include a .js extension on the end, even if you are importing a .ts file. The example would become import project from "../project/projectClient.js".

- Node.js, when using the ES Module engine, now requires explicit paths to code files. You can no longer import a module from a directory (e.g. import foo from "./my/directory") and expect Node to look for an index.js file. You have to change this to import foo from "./my/directory/index.js".

- The __dirname built-in must be changed to import.meta.dirname.
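The two path rules above can be sketched as a small TypeScript function that turns a pre-7.0 relative import specifier into the explicit form Node's ESM loader expects. toEsmSpecifier and its isDirectory flag are hypothetical names for illustration only; they are not part of the geoprocessing library, and in practice you will usually fix each import by hand as VSCode flags it.

```typescript
// Hypothetical helper illustrating the ESM import rules; not a geoprocessing API.
// Extensions that already form a valid ESM specifier and are left alone.
const KEEP_EXTENSION = /\.(js|cjs|mjs|json)$/;
// TypeScript sources are imported with a .js extension under ESM.
const TS_EXTENSION = /\.tsx?$/;

/**
 * Convert a relative import specifier to an explicit ESM path.
 * @param specifier   the path as written, e.g. "./my/directory"
 * @param isDirectory true if the specifier relied on implicit index.js resolution
 */
function toEsmSpecifier(specifier: string, isDirectory: boolean): string {
  // Package imports (e.g. "@seasketch/geoprocessing/client-core") are resolved by Node, so leave them.
  if (!specifier.startsWith("./") && !specifier.startsWith("../")) {
    return specifier;
  }
  // Directory imports must name the index file explicitly.
  if (isDirectory) {
    return `${specifier.replace(/\/$/, "")}/index.js`;
  }
  if (KEEP_EXTENSION.test(specifier)) {
    return specifier;
  }
  // "./foo.ts" becomes "./foo.js"; "./foo" becomes "./foo.js".
  return TS_EXTENSION.test(specifier)
    ? specifier.replace(TS_EXTENSION, ".js")
    : `${specifier}.js`;
}

console.log(toEsmSpecifier("./my/directory", true)); // ./my/directory/index.js
console.log(toEsmSpecifier("../project/projectClient", false)); // ../project/projectClient.js
```

Deciding whether a specifier points at a directory is left to the caller here; a real codemod would have to check the filesystem.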

Migrate Asset Imports

require is no longer allowed for importing images and other static assets. Vite expects you to import assets directly as URLs. SizeCard.tsx is one component, installed by default with projects, that will need to be updated.

Change:

<img

src={require("../assets/img/territorial_waters.png")}

style={{ maxWidth: "100%" }}

/>

to:

import watersImgUrl from "../assets/img/territorial_waters.png";

...

{<img src={watersImgUrl} style={{ maxWidth: "100%" }} />}

At this point, VSCode will complain about your image import, because TypeScript doesn't support importing anything other than code and JSON files by default. Vite, the code bundler now used by your project, does know how to do this; you just need to load its client type definitions by creating a file called vite-env.d.ts at the top level of your project with the following:

/// <reference types="vite/client" />

// Add Vite types to project

// https://vitejs.dev/guide/features.html#client-types

Migrate Preprocessing Functions

Preprocessing functions should be upgraded to the newest form.

Other Changes

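- Any .js file in your codebase that uses CommonJS syntax (require, module.exports) may need to be renamed with a .cjs extension, since Node treats .js files as ES modules when package.json sets "type": "module".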

- Update project/projectClient.ts to use import attributes syntax (with { type: "json" }) for its JSON imports:

import datasources from "./datasources.json" with { type: "json" };

import metrics from "./metrics.json" with { type: "json" };

import precalc from "./precalc.json" with { type: "json" };

import objectives from "./objectives.json" with { type: "json" };

import geographies from "./geographies.json" with { type: "json" };

import basic from "./basic.json" with { type: "json" };

import projectPackage from "../package.json" with { type: "json" };

import gp from "../project/geoprocessing.json" with { type: "json" };

import { ProjectClientBase } from "@seasketch/geoprocessing/client-core";

const projectClient = new ProjectClientBase({

datasources,

metricGroups: metrics,

precalc,

objectives,

geographies,

basic,

package: projectPackage,

geoprocessing: gp,

});

export default projectClient;

Migrate Styled Components

- If you have report components that use styled-components for their styling, you will need to change all code imports of styled-components from

import styled from "styled-components";

to use the named export:

import { styled } from "styled-components";

- Also, when you run storybook or load your reports in production, you may start to see React console warnings about extra attributes being present:

React does not recognize the rowTotals prop on a DOM element. If you intentionally want it to appear in the DOM as a custom attribute, spell it as lowercase rowtotals instead. If you accidentally passed it from a parent component, remove it from the DOM element.

The solution is to switch to transient props, which are component prop names that start with a dollar sign (e.g. $rowTotals instead of rowTotals). styled-components automatically filters these props out, so they are never passed to React to be rendered as element attributes in the browser.

Stop Importing Directly From @seasketch/geoprocessing

- Report client code must no longer import from the geoprocessing library's top-level entry point, @seasketch/geoprocessing, or you may see a "require is not defined" error or other errors about Node-specific modules not being found. The solution is to switch, for example, from:

import { ProjectClientBase } from "@seasketch/geoprocessing";

to:

import { ProjectClientBase } from "@seasketch/geoprocessing/client-core";

The top-level entry point has persisted in some code because the previous code bundler, Webpack, did some extra magic to keep Node modules out of report client browser code. The new Vite code bundler does not do this and leaves it to you to track your imports. Use the geoprocessing library's client-core and client-ui entry points instead; these should offer everything you need.

6.0 to 6.1

- Run reimport:data to ensure that all raster datasources in data/dist are in an equal area projection.

- Run precalc:data for all raster datasources to precalculate additional metrics, including sum, area, valid, and count.

- Run publish:data for all raster datasources to ensure the equal area version is published to S3 storage.

- Migrate geoprocessing functions from overlapRaster() (now deprecated) to rasterMetrics() as you have time and need to calculate additional stats like area. rasterStats() and getArea() are available as lower-level alternatives for constructing your own functions.

- Any direct use of geoblaze that passes a polygon feature for overlap must first reproject the feature to an equal area projection using toRasterProjection. See getSum for an example.

- Any use of the deprecated loadCogWindow() should be replaced with the newer loadCog(). The former doesn't appear to work correctly with functions like rasterStats() and rasterMetrics().

5.x to 6.x

- Add explodeMulti: true to all vector datasources in project/datasources.json. You can set this to false if you know for sure you need to maintain multipolygons in the datasource. Otherwise, breaking them up can speed up geoprocessing functions by not fetching and operating on extra polygons outside the bounding box of a sketch.
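With many vector datasources, this edit can be scripted. The sketch below is a hypothetical helper, not part of the geoprocessing CLI; DatasourceEntry is a minimal stand-in type that assumes each entry has the datasourceId and geo_type fields seen in the datasource examples later in this guide.

```typescript
// Hypothetical minimal stand-in for a datasources.json entry; real entries have many more fields.
interface DatasourceEntry {
  datasourceId: string;
  geo_type: "vector" | "raster";
  explodeMulti?: boolean;
  [key: string]: unknown;
}

/**
 * Return a copy of the datasources with explodeMulti: true added to every
 * vector datasource that doesn't set it yet. An explicit false is kept, for
 * datasources that must keep their multipolygons intact. Rasters are untouched.
 */
function addExplodeMulti(datasources: DatasourceEntry[]): DatasourceEntry[] {
  return datasources.map((ds) =>
    ds.geo_type === "vector" && ds.explodeMulti === undefined
      ? { ...ds, explodeMulti: true }
      : ds,
  );
}
```

You could apply it by parsing project/datasources.json with JSON.parse, passing the array through addExplodeMulti, and writing the result back with JSON.stringify(updated, null, 2). Leaving an explicit false untouched is a choice of this sketch, so that datasources you've deliberately opted out stay that way.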

4.x to 5.x

package.json

- Update package.json to the latest 5.x version of the geoprocessing library and run npm install.

- Add the precalc:data and precalc:data:clean CLI commands to the scripts section of package.json:

{

"precalc:data": "start-server-and-test 'http-server data/dist -c-1 -p 8001' http://localhost:8001 precalc:data_",

"precalc:data_": "geoprocessing precalc:data",

"precalc:data:clean": "geoprocessing precalc:data:clean"

}

- Drop use of the web server from the import:data and reimport:data scripts:

{

"import:data": "geoprocessing import:data",

"reimport:data": "geoprocessing reimport:data"

}

- The ProjectClient now takes precalc metrics and geographies as input. Update project/projectClient.ts to the following:

import datasources from "./datasources.json" with { type: "json" };

import metrics from "./metrics.json" with { type: "json" };

import precalc from "./precalc.json" with { type: "json" };

import objectives from "./objectives.json" with { type: "json" };

import geographies from "./geographies.json" with { type: "json" };

import basic from "./basic.json" with { type: "json" };

import projectPackage from "../package.json" with { type: "json" };

import gp from "../project/geoprocessing.json" with { type: "json" };

import { ProjectClientBase } from "@seasketch/geoprocessing";

const projectClient = new ProjectClientBase({

datasources,

metricGroups: metrics,

precalc: precalc,

objectives,

geographies,

basic,

package: projectPackage,

geoprocessing: gp,

});

export default projectClient;

Geographies

Geographies are a new construct, most commonly used for planning boundaries. You are required to define at least one per project, and you can have more than one. Projects have always had them, but they were implicitly defined based on how data was clipped, which was both unclear to the report developer and very limiting. Geographies are explicit, so there is no longer confusion about whether and how to clip datasources to one or more planning boundaries. You just define what each geography's boundary is by associating it with a datasource. The precalc command then clips the datasource (whether vector or raster) to each geography's features (intersection) and precomputes metrics with what remains (total area, count, sum). This replaces what was keyStats in datasources.json. Precalc metrics are typically used as the denominator when calculating % sketch overlap in reports. Geoprocessing functions also clip the current sketch to one or more geographies at runtime when calculating metrics. These are often used as the numerator when calculating % sketch overlap in reports.
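As a worked illustration of that numerator/denominator relationship, the hypothetical function below computes the fraction of a geography's precalculated total that a sketch overlaps. The numbers are made up, and in a real report you would typically let toPercentMetric() combine the sketch metrics with the precalc metrics rather than dividing by hand.

```typescript
/**
 * Hypothetical helper: fraction of a geography's precalculated total that a sketch overlaps.
 * @param sketchValue  metric for the sketch clipped to the geography (numerator, computed at runtime)
 * @param precalcTotal precalc metric for the datasource clipped to the same geography (denominator)
 * @returns normally a value between 0 and 1; multiply by 100 for a percentage
 */
function overlapFraction(sketchValue: number, precalcTotal: number): number {
  // An empty intersection of datasource and geography leaves nothing to overlap.
  if (precalcTotal <= 0) return 0;
  return sketchValue / precalcTotal;
}

// Made-up numbers: 150 km² of habitat inside the sketch, 2,000 km² inside the whole geography.
const fraction = overlapFraction(150, 2000);
console.log(`${(fraction * 100).toFixed(1)}%`); // 7.5%
```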

To set up your project's default geography, create a new file project/geographies.json. Choose one of the options below for your default geography. Just make sure that the geography is assigned to the default-boundary group and that precalc is set to true.

- If you already have a local datasource with your planning boundary, then just define a geography that uses that datasource.

[

{

"geographyId": "nearshore",

"datasourceId": "6nm_boundary",

"display": "Azores",

"layerId": "x6kSfK6Lb",

"groups": ["default-boundary"],

"precalc": true

}

]

- If your planning boundary is a Marine Regions EEZ, you can define an eez geography that uses the new global-eez-mr-v12 datasource (see below for how to add this datasource to your project), which is the default for a new project when you choose the Ocean EEZ template. You just need to apply the correct bboxFilter and propertyFilter for your EEZ or EEZs of choice.

[

{

"geographyId": "eez",

"datasourceId": "global-eez-mr-v12",

"display": "Samoan EEZ",

"propertyFilter": {

"property": "GEONAME",

"values": ["Samoan Exclusive Economic Zone"]

},

"bboxFilter": [

-174.51139447157757, -15.878383591829206, -170.54265693017294,

-10.960825304544073

],

"groups": ["default-boundary"],

"precalc": true

}

]

- If you don't have a planning boundary, or want to use the entire world as your planning boundary, you can use the world geography, which uses the world datasource (see below for how to add this datasource). world is the new default geography for all new blank geoprocessing projects.

[

{

"geographyId": "world",

"datasourceId": "world",

"display": "World",

"groups": ["default-boundary"],

"precalc": true

}

]
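Whichever option you pick, a quick check along these lines can confirm the default-geography requirement before you run precalc. This is a hypothetical sketch, not a geoprocessing CLI command, and GeographyEntry models only the fields used in the examples above.

```typescript
// Hypothetical minimal model of a geographies.json entry, covering only the
// fields used in the examples above (real entries may have more, e.g. filters).
interface GeographyEntry {
  geographyId: string;
  datasourceId: string;
  display: string;
  groups?: string[];
  precalc: boolean;
}

/**
 * Return human-readable problems with a geographies array.
 * An empty result means at least one geography is defined and at least one
 * is in the "default-boundary" group with precalc set to true.
 */
function checkGeographies(geographies: GeographyEntry[]): string[] {
  const problems: string[] = [];
  if (geographies.length === 0) {
    problems.push("define at least one geography");
  }
  const hasDefault = geographies.some(
    (g) => (g.groups ?? []).includes("default-boundary") && g.precalc === true,
  );
  if (!hasDefault) {
    problems.push('assign a geography to the "default-boundary" group with "precalc": true');
  }
  return problems;
}
```

You might run it on the parsed contents of project/geographies.json (for example via readFileSync from Node's node:fs module and JSON.parse) before running npm run precalc:data.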

Datasources

Based on your geography choice above, add the corresponding datasource for this geography to your datasources.json file.

World datasource published by global-datasources:

[

{

"datasourceId": "world",

"geo_type": "vector",

"formats": ["json", "fgb"],

"layerName": "world",

"classKeys": [],

"url": "https://gp-global-datasources-datasets.s3.us-west-1.amazonaws.com/world.fgb",

"propertiesToKeep": [],

"metadata": {

"name": "World Outline Polygon",

"description": "World polygon for default project geography in seasketch geoprocessing proejcts",

"version": "1.0",

"publisher": "SeaSketch",

"publishDate": "20231018",

"publishLink": ""

},

"precalc": false

}

]

Global EEZ datasource published by global-datasources (with filters set for the Samoan EEZ):

[

{

"datasourceId": "global-eez-mr-v12",

"geo_type": "vector",

"formats": ["fgb", "json"],

"metadata": {

"name": "World EEZ v11",

"description": "World EEZ boundaries and disputed areas",

"version": "11.0",

"publisher": "Flanders Marine Institute (VLIZ)",

"publishDate": "2019118",

"publishLink": "https://marineregions.org/"

},

"idProperty": "GEONAME",

"nameProperty": "GEONAME",

"classKeys": [],

"url": "https://gp-global-datasources-datasets.s3.us-west-1.amazonaws.com/global-eez-mr-v12.fgb",

"propertyFilter": {

"property": "GEONAME",

"values": ["Samoan Exclusive Economic Zone"]

},

"bboxFilter": [

-174.51139447157757, -15.878383591829206, -170.54265693017294,

-10.960825304544073

],

"precalc": false

}

]

Finally, you need to add a precalc setting to all other datasources in your datasources.json file. This property is required, and if it is missing you will see validation errors when running any data commands or smoke tests.

- Add "precalc": true to all datasources in project/datasources.json that metrics should be precalculated for. This is typically limited to datasources for which you need to precalc an overall summary metric (total area, total feature count) so that you can report the % of the total that a sketch overlaps with. Otherwise, you don't need to precalc.

- Set all other datasources to "precalc": false. This includes global datasources, and datasources that are only a source for geography features and otherwise aren't used in reports. Setting these to true will at best just precalculate extra metrics that won't be used. At worst, it will try to fetch entire global datasources and fail, because the necessary filters aren't in place.

Precalc

Once you have your geographies and datasources properly configured, you're ready to run the precalc:data command.
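Before running it, a small script along these lines could list any datasources that still lack the now-required precalc property. It is a hypothetical sketch that only inspects the datasourceId and precalc fields; the geoprocessing CLI performs its own validation.

```typescript
// Hypothetical minimal shape: only the fields this check reads.
interface DatasourceLike {
  datasourceId: string;
  precalc?: unknown;
}

/** Return the ids of datasources whose precalc property is missing or not a boolean. */
function missingPrecalc(datasources: DatasourceLike[]): string[] {
  return datasources
    .filter((ds) => typeof ds.precalc !== "boolean")
    .map((ds) => ds.datasourceId);
}
```

Run it on the parsed contents of project/datasources.json, then set each reported datasource to true or false following the guidance in the Datasources section.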

- Create a new file project/precalc.json populated with an empty array [], then run:

npm run precalc:data

This will precompute metrics for all combinations of geographies and datasources. It will also strip any existing keyStats properties from datasources.json and populate precalc.json.

Geoprocessing functions

- Update the clipToGeography function to allow geographies to use external datasources. To copy the file from the gp library to your project space, run the following from a terminal in the top level of your project:

mkdir -p src/util && cp -r node_modules/@seasketch/geoprocessing/dist/base-project/src/util/clipToGeography.ts src/util/clipToGeography.ts

- Update all geoprocessing functions to use the following boilerplate:

  - look for geographyIds passed in extraParams

  - get the geography using the project client, and fall back to the default geography

  - clip the current sketch to the geography

  - see azores-nearshore-reports for examples.

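The first two boilerplate steps amount to "use the first requested geography if there is one, otherwise the default". The sketch below shows only that selection logic, with hypothetical types and a hypothetical function name; in a real function you would use the project client calls shown in azores-nearshore-reports, then clip the sketch to the returned geography.

```typescript
// Hypothetical minimal types for illustration only.
interface Geography {
  geographyId: string;
  display: string;
  groups?: string[];
}

interface ExtraParams {
  geographyIds?: string[];
}

/**
 * Pick the geography a geoprocessing function should clip to: the first id in
 * extraParams.geographyIds if it matches a known geography, otherwise the
 * first geography in the "default-boundary" group.
 */
function resolveGeography(geographies: Geography[], extraParams: ExtraParams = {}): Geography {
  const requestedId = extraParams.geographyIds?.[0];
  const requested = geographies.find((g) => g.geographyId === requestedId);
  if (requested) return requested;

  const fallback = geographies.find((g) => (g.groups ?? []).includes("default-boundary"));
  if (!fallback) {
    throw new Error('No geography found in the "default-boundary" group');
  }
  return fallback;
}
```

Falling back silently when an unknown id is requested is a choice of this sketch; failing loudly instead may be preferable if a bad id should never reach your function.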

Report Clients

Report clients should migrate to the new boilerplate code. See azores-nearshore-reports for examples, specifically:

- receive the current geography as a geographyId passed in props.

- get the current geography using project.getGeographyById.

- pass geographyId to project.getPrecalcMetrics().

- pass geographyId to ResultsCard as extraParams for the gp function to use.

- use the curGeography.display string as appropriate in the client to tell the user which geography is being reported on.

- Update any calls to toPercentMetric(). Overriding metricIds are now passed in through an options object instead of directly (e.g. toPercentMetric(singleMetrics, precalcMetrics, project.getMetricGroupPercId(metricGroup)) becomes toPercentMetric(singleMetrics, precalcMetrics, { metricIdOverride: project.getMetricGroupPercId(metricGroup) })).

If you would like to allow the user to switch between planning geographies from the report UI, you can add a GeographySwitcher at the top level of the report client (see the azores-nearshore-reports example). The user-chosen geography ID is then passed into each ResultsCard and on to each geoprocessing function as extraParams.

Language Translation

- Geography display names in geographies.json are now extracted with npm run extract:translations. Then translate these strings per your current workflow, and GeographySwitcher will use them automatically. The same is true for objectives.json and metrics.json.

- Update src/i18n/i18nAsync.ts to the latest version. Copy the following file from the gp library to your project space to overwrite it:

cp -r node_modules/@seasketch/geoprocessing/dist/base-project/src/i18n/i18nAsync.ts src/i18n/i18nAsync.ts